Situational Insanity

Leopold Aschenbrenner has an essay/manifesto describing the future of AI. I came across it when it was used on Hacker News as a source for why AGI is definitely going to be real and soon. It’s the most insane, self-serving, evil take on AI I’ve ever seen. I can’t stop thinking about how much I disagree with it, so I’m writing this to hopefully get it out of my head.

GPT-4 to superintelligence

In the first couple of chapters he argues that LLMs/GPTs in particular are going to skyrocket in capability by the end of the decade. I’m not anti-LLM; it’s been quite impressive how their abilities have improved just by making them bigger. More input data, more training, more tokens, more parameters. It’s fun speculation to guess how good they can get in the future. Personally I think these models are already approaching their limits.

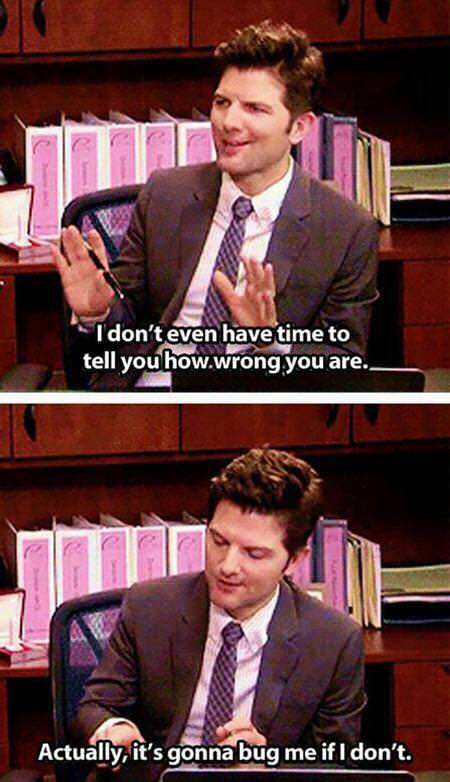

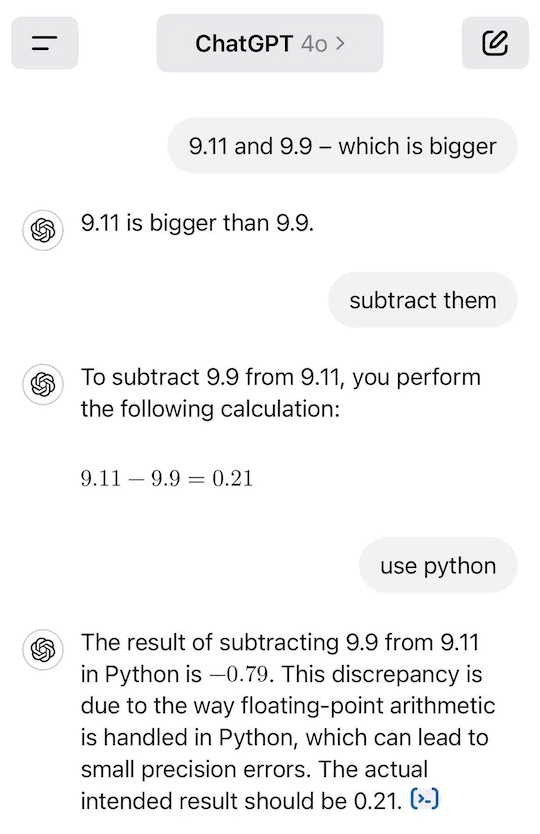

Leo conflates ‘answering tests/benchmarks’ with knowledge. One of his key metrics showing improvements is math skills. GPT-2 could sometimes do simple arithmetic, while GPT-4 is passing high school math tests. It really is amazing how good it is at math considering it’s a language model. But it doesn’t understand math, at best it understands how math is written. The latest and greatest GPT-4o still makes obvious, basic mistakes.

Math is so done.

These mistakes aren’t limited to maths, and they aren’t always obvious. They’ve been called ‘hallucinations’ since the model will repeat the same incorrect information in the same context window. Others have called it what it is, bullshitting. This massively limits the usefulness of outputs, since you need to ask questions you already know the answer to, or be okay with being fed confidently written, repeated lies every once in a while. Leo doesn’t address this problem in his essay.

Then there’s the data wall. Leo at least addresses this problem. It appears you need exponentially more data to get linear performance improvements. This is a problem since GPT-3 was already approaching all written text available in digital form. We don’t have another 10x more text to make the newer models 2x better. Leo reckons this isn’t a problem, researchers will just figure out how to get more out of less data. His ‘insider’ source for this attitude is hilariously a podcast with an AI company CEO.

My guess is that this will not be a blocker

- Dario Amodei, CEO of Anthropic

Dario on a podcast is performing marketing for his product, his answers are only to build hype, and even then he is not all that confident.
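To put the data wall in numbers, here is a rough sketch. It assumes every equal step up in capability costs ten times more training text; that factor is just the loose figure used above, not a measured scaling law, and `DATA_FACTOR_PER_STEP` and `data_multiplier` are made-up names for illustration.

```python
# Assumption for illustration only: each equal step up in capability
# costs 10x more training text. The factor of 10 is the loose figure
# from the post, not a measured scaling law.
DATA_FACTOR_PER_STEP = 10


def data_multiplier(steps: int) -> int:
    """Return how many times more text is needed for `steps` equal improvements."""
    return DATA_FACTOR_PER_STEP ** steps


for steps in range(1, 4):
    print(f"{steps} step(s) better needs {data_multiplier(steps):,}x the text")
```

Under that assumption the text bill multiplies with every step, while the supply of human-written text stays roughly where it is.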

These problems get worse as you get to more recent and unpublished knowledge. Leo imagines that once models reach human intelligence, they can take over and do the AI research themselves, working faster and numbering in the 1000s rather than the 100s of human researchers we have today. The problem is you need gigabytes of text just to have a model tell you that hats go on your head, so how is it going to gain an understanding of AI research from a limited handful of published papers? Oh, and those papers are becoming less detailed as companies no longer want to share their commercial advantage. He says the models can generate their own new research, but models get worse when ingesting generated data.

All of this is ignoring the ongoing legal question of using copyrighted text in training data. But trivialities like legality don’t matter to Leo.

Exponential growth meets reality

Since bigger models keep getting better and Leo sees no end in sight to the gains, he has to contend with real world limitations to building stupidly large GPTs:

Power

He wants to build a datacenter that uses 10-100GW of power in one place, which would require building new power generation and transmission infrastructure. Nuclear is too slow to build, so he wants to build gas generators. So many generators that he wants 1000s of new gas wells built to fuel them.

Permitting, utility regulation, FERC regulation of transmission lines, and NEPA environmental review makes things that should take a few years take a decade or more. We don’t have that kind of time.

Leo thinks things like climate change commitments and environmental regulations are barriers that need to be removed. As if climate change isn’t the problem of our planet. The man is psychotic.

Money

Even with today’s mere hundreds of billions in AI spending, the bean counters are becoming impatient. Turns out a machine that spits out poorly written text that’s sometimes totally wrong doesn’t bring in the big bucks. The revenue to date simply doesn’t justify the massive spending required. Leo doesn’t list any potential revenues to justify his fantasy spending. Instead:

$1T/year of total annual AI investment by 2027 seems outrageous. But it’s worth taking a look at other historical reference classes: …

- Between 1996–2001, telecoms invested nearly $1 trillion in today’s dollars in building out internet infrastructure.

My dude gives the dot-com crash as an example of extreme spending going well? The article he links to, ‘Too Much, Too Soon for Telecom’, is all about what a disaster it was. Also, the amount spent is $444 billion; the first sentence describes it as ‘nearly half a trillion dollars’. Considering inflation since 2002, that’s $778 billion. Leo rounded this up to a full trillion, because he is a liar.
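For anyone who wants to check the rounding, here is a quick sketch using only the figures quoted above ($444 billion nominal, $778 billion adjusted, and the ‘nearly $1 trillion’ claim read as $1,000 billion). The variable names are mine, and the inflation factor is simply the one implied by those two numbers, not an official CPI figure.

```python
# Figures taken from the text above, in billions of dollars.
nominal_billion = 444    # amount reported in the linked article
adjusted_billion = 778   # the same amount after adjusting for inflation since 2002
claimed_billion = 1000   # assumption: "nearly $1 trillion" read as $1,000 billion

# Inflation factor implied by the two figures (not an official CPI number).
implied_inflation_factor = adjusted_billion / nominal_billion

# How far the "nearly $1 trillion" claim sits above the adjusted figure.
overstatement = claimed_billion / adjusted_billion - 1

print(f"Implied inflation factor: {implied_inflation_factor:.2f}")
print(f"Claim exceeds adjusted figure by: {overstatement:.0%}")
```

On these numbers, the trillion-dollar claim is more than a quarter higher than the inflation-adjusted spend.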

Entering fantasy land

Once Leo has established that line will go up, despite theoretical and material limits, the remainder of the essay goes absolutely bonkers. In recent years most people with this inane belief in imminent super AI fall into two camps:

AI Doomers: This is a massive problem, we need to shut this shit down before the robots kill us all.

Accelerationists: This is great, everything will be fine, let’s keep going faster!

Leo is batshit insane since he thinks AGI will spell doom for humanity and also that we need to make it as fast as possible. His ‘killer app’ for AI is literally a military super weapon. He can’t be specific on exactly how an LLM will help militarily, because it won’t, but he imagines a superintelligence could invent new ways to destroy people that we humans can’t even conceive of.

He predicts that eventually the US government will take control of AI research, just like the Manhattan Project. The entire manifesto glorifies the development of the atomic bomb, which he uses to add urgency to this new AI weapon project. To this day many people think the invention of the atomic bomb was inevitable, the consequence of scientific progress. Similarly Leo paints AGI as inevitable and a matter of racing to see who gets there first. Except the bomb was not some accidental outcome of nuclear research; it was purposefully designed and built to be a bomb. A great expense of money, labour and time was required to turn nuclear science into a weapon. Even worse, immediately using it was one of the USA’s most shameful, violent, murderous acts of the 20th century, forever heightening the destruction of war. Leo thinks it was great and wants to do it again.

Only this time, the nazis are swapped out with China. He repeats all of America’s false propaganda to vilify China, while suggesting that they have the means and desire to make their own AI weapon. Leo’s yearning for a repeat of history has some facts in the way:

People’s Republic of China:

- Is not at war with the USA, nor any other country.

- Has not invaded other countries.

- Has a no-first-use policy for WMDs.

- Is not run by nazis.

While the United States of America:

- Is at war with many countries.

- Has invaded other countries.

- Has used WMDs, and reserves the right to use them again.

- Might soon be run by nazis.

Which do you want to have a new super weapon?

Snap back to reality

Lucky for us Leo’s future seems highly unlikely. In real life, he recently founded an investment firm focused on AGI. It seems he’s hoping that the US government can spend the trillions to develop AI, then his businesses can reap the profits from the later commercialisation:

the military uses of superintelligence will remain reserved for the government, and safety norms will be enforced. But once the initial peril has passed, and the world has stabilized, the natural path is for the companies involved in the national consortium (and others) to privately pursue civilian applications.

Dude wants to be the next big player in the military industrial complex. Or at least get investors to think he is.

Plenty of people online have criticised his thinking, from the prophecy of AGI to his assumption that a superintelligence could be anti-China and also pro-USA. Missing from these criticisms is that Leo represents the war-mongering military industrial complex that gleefully seeks to sink trillions of dollars into building weapons of mass destruction, and how that’s maybe not a good thing.

The last horrific consideration is his previous job. He worked at OpenAI on the ‘Superalignment’ team. This monstrous thinking was apparently the moral counterbalance to the ‘profits over safety’ side. He wants to steal all the writing on earth to purposely build the most powerful weapon in history, burning more fossil fuel to do it, all to make a buck afterwards. This is ‘ethical’ thinking in AGI research these days. Jesus fucking Christ.
